Welcome to the ah2ac2 Library Documentation

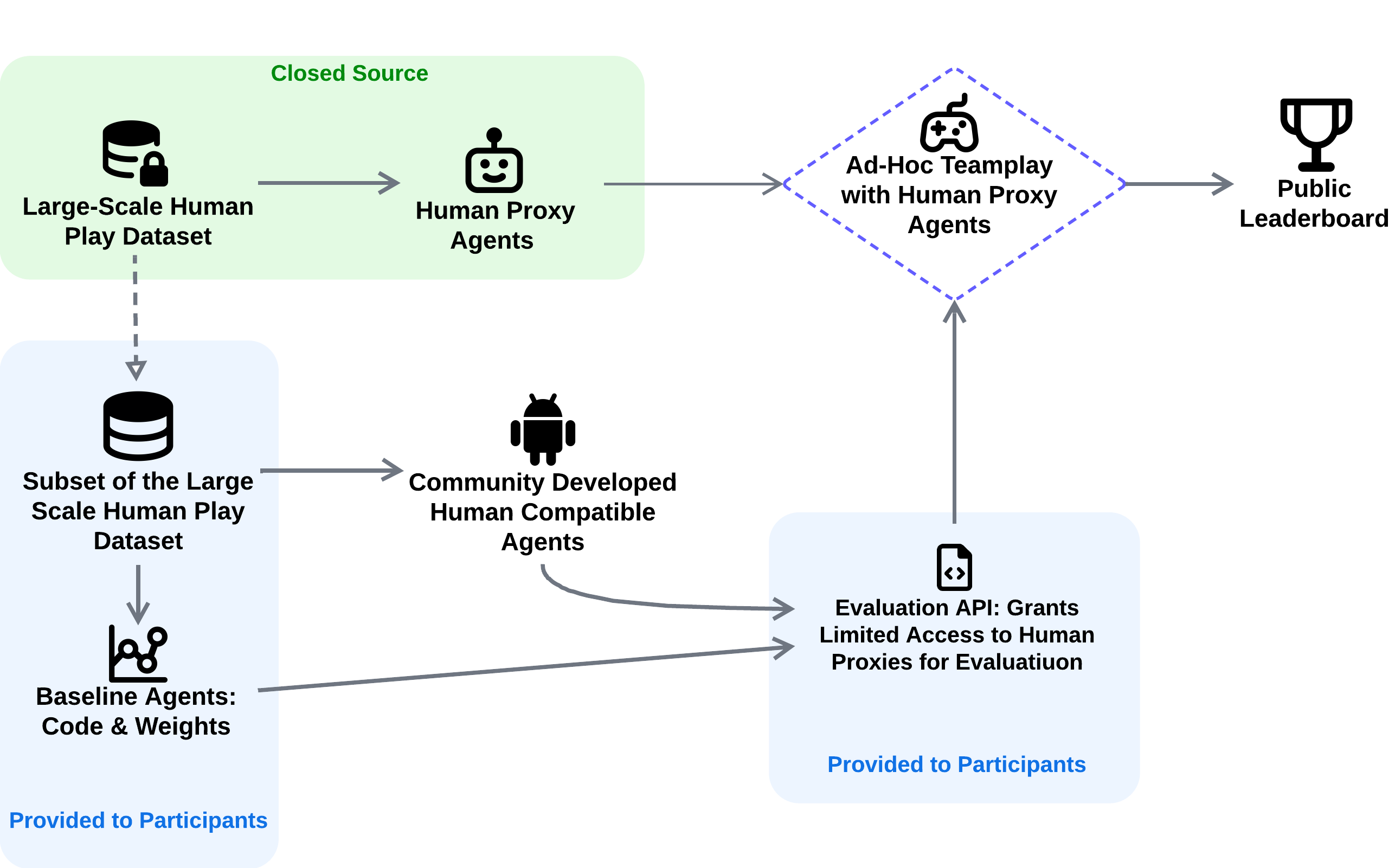

This is the documentation for the Ad-Hoc Human-AI Coordination Challenge (AH2AC2). AH2AC2 is a benchmark intended to drive the development of AI agents that collaborate effectively with human-like partners, especially when little prior interaction data is available.

Navigating These Docs

This documentation provides comprehensive information for using the ah2ac2 library and understanding how you can participate in the associated challenge. You will find the following sections:

Datasets: Detailed information on the human gameplay data provided, including its structure and access methods.

Evaluation: An overview of the AH2AC2 evaluation protocol, including submission procedures and the metrics used to assess agent performance.

API Reference: A complete reference for the ah2ac2 library's functions, classes, and modules.

The AH2AC2 Challenge

The Ad-Hoc Human-AI Coordination Challenge (AH2AC2) provides a standardized environment for evaluating AI agents on their ability to coordinate with human-like counterparts in Hanabi. The challenge emphasizes data-efficient methods and uses human proxy agents for robust and reproducible evaluation.

For a detailed explanation of the challenge, including methodology and baseline results, please refer to our research paper.

📄 Research Paper: ArXiv

Resources

- 🏆 Public Leaderboard: ah2ac2.com

- 🐍 Codebase: View on GitHub

- 🤗 Dataset: Download from Hugging Face

- 📄 Research Paper: ArXiv

Citation

@inproceedings{dizdarevic2025adhoc,
  title={Ad-Hoc Human-{AI} Coordination Challenge},
  author={Tin Dizdarevi{\'c} and Ravi Hammond and Tobias Gessler and Anisoara Calinescu and Jonathan Cook and Matteo Gallici and Andrei Lupu and Jakob Nicolaus Foerster},
  booktitle={Forty-second International Conference on Machine Learning},
  year={2025},
  url={https://openreview.net/forum?id=FuGps5Zyia}
}